“Dramatic advances in artificial intelligence are opening up a range of exciting new applications. With these newfound powers comes increased responsibility.”

–DEMIS HASSABIS, SHANE LEGG and MUSTAFA SULEYMAN, co-founders of DeepMind Technologies

Last week, I had the good fortune of hearing two thought leaders speak at separate events. One is a Corporate Vice President (CVP) at Microsoft who is leading the technology giant into its next phase of growth and development. The other was John James, a very impressive 37-year-old running for the US Senate in Michigan. While the events and the men were inherently different, both messages revolved around the importance of advancing technology thoughtfully: one at a corporate level and the other at a government level.

The messages struck a particular chord given the topic we presented in last week’s Evergreen Virtual Advisor. One question the Microsoft CVP fielded was, “What technology will have the biggest impact over the next 10 years?” His response was immediate: Artificial Intelligence. He then gave some very interesting examples of where he sees the technology heading. At the political event, John James took to the podium and preached, unprompted, about the importance of controlling technologies linked to Artificial Intelligence (AI), especially as they relate to China and Russia.

Interestingly, and not unexpectedly for those with knowledge of the space, both men also warned of the potential risks of not advancing AI technology responsibly. And they’re far from alone in their concern.

Several prominent technologists and visionaries have shared similar messages publicly. Specifically, the late Stephen Hawking, the great Bill Gates, the embattled Elon Musk, the also-presently-embattled Steve Wozniak (co-founder of Apple), and many other big names in science and technology have discussed or raised fears about the implications of a future in which Artificial Intelligence plays a more significant role.

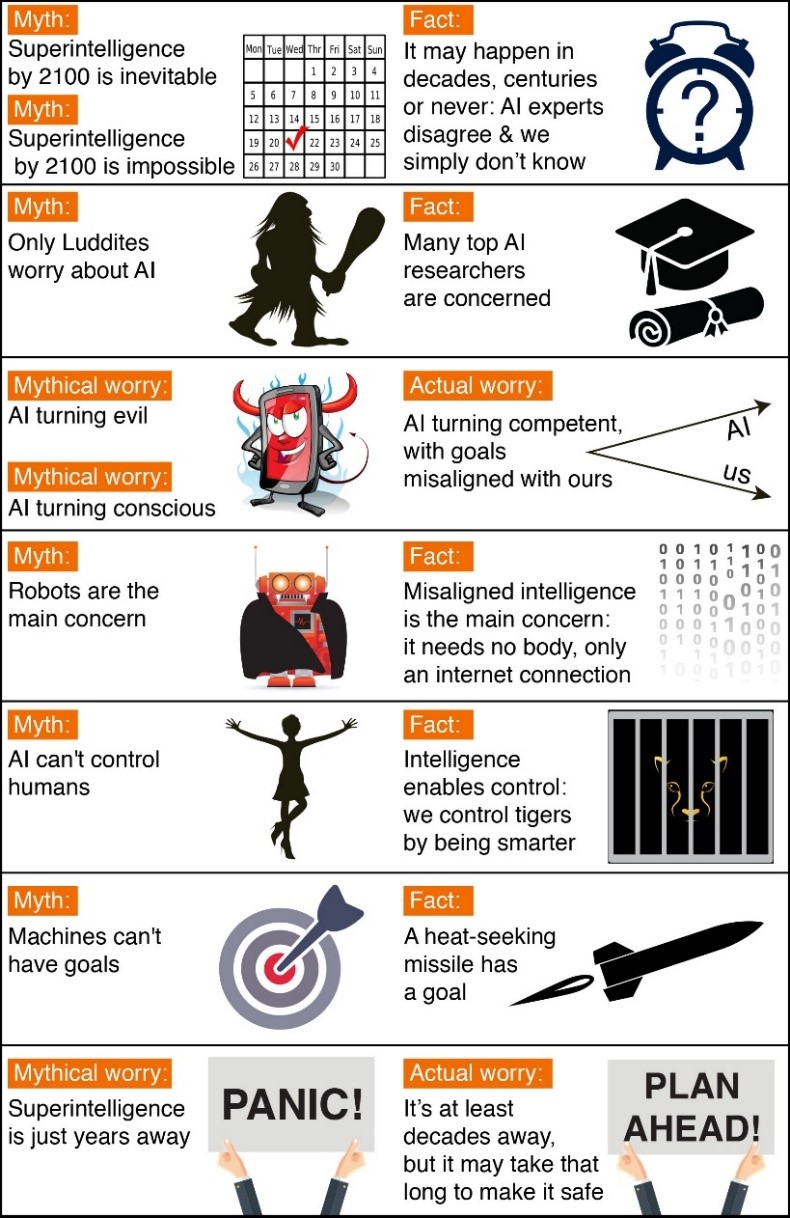

To be clear, when discussing the potential risks or concerns around AI, it is important to distinguish fact from fiction. As the following chart illustrates, there are several myths that have been propagated through popular culture and science fiction that are outside the realm of this discussion.

Source: Futureoflife.org


Superintelligence (the theory that if machine brains surpass human brains in general intelligence, “super-intelligent machines” could replace humans as the dominant lifeform on Earth) and technological singularity (the hypothesis that the invention of artificial superintelligence (ASI) will abruptly trigger runaway technological growth, resulting in unfathomable changes to human civilization) are both valid theories with many supporters. They should undoubtedly be part of any conversation about thoughtful AI advancement. Bill Gates wrote on the subject in a recent “Ask Me Anything” session on Reddit:

“I am in the camp that is concerned about superintelligence. First, machines will do a lot of jobs for us and not be super intelligent. That should be positive if we manage it well. A few decades after that though the intelligence is strong enough to be a concern. I agree with Elon Musk and some others on this and don’t understand why some people are not concerned.”

Despite these existential red flags, for the sake of this discussion we will avoid a deep dive into superintelligence and singularity and instead focus on an additional risk that most researchers and experts agree exists: that AI will be programmed to do something destructive.

Programmed for Destruction

As discussed in several past EVAs, one of the primary objectives of President Trump’s war on trade is to limit China’s ability to gain access to intellectual property (IP) and technology that could be used against the US and its allies (whoever they are these days) – either by way of a physical war or cyber war. Artificial Intelligence-related tech is central to those fears.

The concern is that autonomous weapons could be used malevolently if they fall into the hands of the wrong country, person or group of people. These machines could theoretically make “intelligent decisions” based on their underlying code and continue to operate without human input, creating a scenario in which machines programmed to complete a sinister objective cannot easily be “turned off”.

This may sound like the stuff of science fiction, but the present-day dangers in this arena are very real and are the subject of ongoing economic, political, national security and trade discourse.

An Intelligent Dilemma

In 1899, the world’s predominant nations signed a treaty banning the use of aircraft for military purposes. Five years later, the treaty was allowed to expire, and a decade after that, countries fighting in World War I made quick use of the “flying birds” to gain an aerial advantage. Decades later, the proliferation of nuclear weapons among a few key players prompted a race to build and harness a new, powerful and destructive weapon for a military edge. Luckily, to this point, nuclear weapons have been largely absent from the throes of war.

Where the development of Artificial Intelligence differs from that of aircraft and nuclear weapons is in its low cost and the abundance of its resources. Code and digital data tend to be relatively inexpensive and, in many cases, can be spread quickly and for free (e.g., open source). So, how are we to think about the risks associated with Artificial Intelligence and its very real implications for global harmony?

To answer, I will redirect you to an ominous excerpt from a 2015 letter signed by many of the visionaries mentioned above, along with a host of other technologists:

“If any major military power pushes ahead with AI weapon development, a global arms race is virtually inevitable, and the endpoint of this technological trajectory is obvious: autonomous weapons will become the Kalashnikovs of tomorrow. Unlike nuclear weapons, they require no costly or hard-to-obtain raw materials, so they will become ubiquitous and cheap for all significant military powers to mass-produce.”

Over three years and one global trade war later, this scenario has gone from a fringe, ahead-of-its-time concern to a mainstream and relevant one. As AI continues to advance at a dizzying pace, its real-world applications have multiplied, and so have concerns about living in a world inundated by intelligent machines capable of performing specific tasks.

The dilemma facing lawmakers and leaders today is how developments in Artificial Intelligence can be thoughtfully monitored at a national and global level to protect the interests of man- and womankind, while still allowing citizens, corporations, and governments enough freedom to leverage this new and rapidly advancing technology to increase efficiency and generate added value.

While there are no easy or glaringly obvious answers to this dilemma, Harvard’s Belfer Center for Science and International Affairs produced a report in 2017 recommending that the National Security Council, DoD, and State Department begin studying what internationally agreed-on limits should be imposed on AI. Some efforts to answer those questions are already underway globally. In April, the European Union (EU) signed a Declaration of Cooperation signaling a willingness to join forces on a common European approach to AI advancement.

Of course, the concern lies less with global “good actors” willing to come to the table for a discussion than with “bad actors” who will use the technology to achieve a specific end goal regardless of what accords, treaties or resolutions are signed. This week’s report that Chinese government officials embedded malicious microchips on computer servers headed to the U.S. in 2014 and 2015 underscores the difficulty of getting all global players to work toward a common goal. Nevertheless, governments and corporations should make the effort to align on a global vision for responsible AI advancement.

Call me naïve for believing such global discourse is even possible, but human lives may depend on it.

Michael Johnston

Tech Contributor

To contact Michael, email:

mjohnston@evergreengavekal.com

OUR CURRENT LIKES AND DISLIKES

Changes highlighted in bold.

LIKE *

* Some EVA readers have questioned why Evergreen has as many ‘Likes’ as it does in light of our concerns about severe overvaluation in most US stocks and growing evidence that Bubble 3.0 is deflating. Consequently, it’s important to point out that Evergreen has most of its clients at about one-half of their equity target.

NEUTRAL

DISLIKE

* Credit spreads are the difference between non-government bond interest rates and Treasury yields.

** Due to recent weakness, certain BB issues look attractive.

DISCLOSURE: This material has been prepared or is distributed solely for informational purposes and is not a solicitation or an offer to buy any security or instrument or to participate in any trading strategy. Any opinions, recommendations, and assumptions included in this presentation are based upon current market conditions, reflect our judgment as of the date of this presentation, and are subject to change. Past performance is no guarantee of future results. All investments involve risk including the loss of principal. All material presented is compiled from sources believed to be reliable, but accuracy cannot be guaranteed and Evergreen makes no representation as to its accuracy or completeness. Securities highlighted or discussed in this communication are mentioned for illustrative purposes only and are not a recommendation for these securities. Evergreen actively manages client portfolios and securities discussed in this communication may or may not be held in such portfolios at any given time.